The AI Rack's Unseen Engine: Astera Labs, COSMOS, and the New Economics of Interconnect

From Invisible Bottlenecks to Infrastructure Powerhouse — How Astera Labs Is Engineering the Nervous System of the AI Revolution

TLDR

· Connectivity as the New Bottleneck: Just as past computing inflection points were defined by underappreciated connectivity constraints—front-side buses, early networking, and cloud-scale links—the AI era is revealing interconnect infrastructure as the critical determinant of system performance and economic viability.

· Astera's Strategic Climb from Component to Platform: By solving foundational signal integrity issues with its Aries retimers, then expanding into memory controllers (Leo), smart cables (Taurus), and full rack-scale fabrics (Scorpio X), Astera has evolved from a niche component vendor to a core architectural decision-maker. Its COSMOS software layer transforms passive hardware into intelligent, system-optimizing platforms.

· Economic Leverage and Competitive Moat: With over 300 design wins and deep integration into hyperscaler workflows, Astera has created a high-trust, high-stakes ecosystem. Its neutral positioning between NVIDIA's dominance and emerging open standards (UALink) makes it a key enabler of performance, optionality, and control in the hyperscale AI stack—turning the plumbing into the platform.

The Ghost in the Machine: Lessons from Connectivity Bottlenecks Past

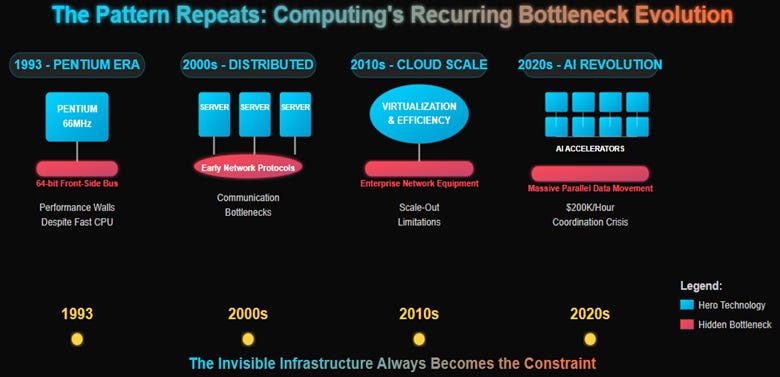

In 1993, Intel launched the Pentium at 60 and 66 MHz, and over the next few years it pushed the chip's core clock well past 100 MHz. Yet overall system performance stubbornly refused to scale in proportion. Despite the CPU's impressive specifications, real-world applications hit performance walls that additional clock speed alone could not overcome.

The culprit wasn't the processor itself but something far more mundane: the 64-bit front-side bus that connected the CPU to system memory, which stayed at 50 to 66 MHz even as core clocks climbed toward 200 MHz. A pathway that had been adequate when bus and core ran at the same speed became a chokepoint, leaving much of the faster Pentiums' computational power idle while it waited on memory. It was a classic technology trap: optimizing the hero component while treating the connecting infrastructure as an afterthought.

This pattern has repeated throughout computing history with remarkable consistency. The transition from mainframes to distributed servers initially focused obsessively on processor performance, only to discover that early networking protocols couldn't handle the communication demands of distributed applications. The mobile revolution prioritized smartphone processors and application performance, until cellular data networks became the determining factor in user experience. The early days of cloud computing emphasized virtualization and server efficiency, only to find that traditional enterprise networking equipment couldn't scale to meet hyperscale demands.

In each case, the initial emphasis on the visible, marquee technology eventually gave way to a painful recognition that the connecting infrastructure—the unsexy, often invisible plumbing layer—ultimately determined what was possible. More importantly, the companies that recognized this transition early and solved the less glamorous connectivity problems often captured extraordinary value, becoming indispensable infrastructure providers even as the original hero technologies evolved or were replaced entirely.

Today, as artificial intelligence reshapes the technology landscape once again, we are witnessing this same dynamic unfold at unprecedented scale and speed. While the spotlight has remained fixed on GPU performance metrics and training algorithms, a quieter revolution is taking place in the server rooms of hyperscale data centers: the transformation of how AI systems move and manage data.

The AI Data Tsunami: Why Interconnect is the New Frontier

The AI revolution isn't merely about faster chips or more sophisticated algorithms; it represents a fundamental shift toward massive parallelism that demands entirely new approaches to data movement. Training frontier large language models such as GPT-4 or Claude requires thousands of accelerators working in tight coordination, generating data flows far larger than those of conventional enterprise workloads. When OpenAI trains a new model or Google develops its latest Gemini variant, the job is not just running calculations; it is orchestrating continuous data movement between thousands of processing units that must communicate with low, predictable latency and high reliability.

The economic stakes of this coordination challenge are staggering. A large, fully loaded AI training cluster can cost over $200,000 per hour to operate, and each H100 GPU represents roughly $25,000 to $40,000 of capital investment. When interconnect bottlenecks leave these accelerators waiting on data, even a few percent of idle time over a weeks-long training run adds up to millions of dollars. For hyperscalers building these systems, ensuring optimal data flow isn't a nice-to-have feature; it's a core business requirement that directly shapes the economics of AI development.
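The arithmetic behind "a few percent of idle time" is simple enough to sketch. The example below reuses the $200,000-per-hour figure from the paragraph above; the 5% stall fraction and 30-day run length are hypothetical inputs chosen for illustration, and the model assumes stalled time is pure waste (no overlap of communication with computation).

```python
def stall_cost(hourly_cluster_cost: float, stall_fraction: float, run_hours: float) -> float:
    """Dollars of cluster time spent with accelerators waiting on data.

    Assumes cost accrues uniformly per hour and that stalled time is
    entirely wasted (no communication/computation overlap).
    """
    if not 0.0 <= stall_fraction <= 1.0:
        raise ValueError("stall_fraction must be between 0 and 1")
    return hourly_cluster_cost * stall_fraction * run_hours


# Hypothetical run: $200,000/hour, a 30-day run, 5% of time stalled on interconnect.
wasted = stall_cost(200_000, 0.05, 30 * 24)
print(f"${wasted:,.0f}")  # $7,200,000
```

Real clusters overlap much of their communication with computation, so the stall fraction is the quantity that matters, not raw transfer time.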

The challenge extends beyond raw performance to encompass entirely new architectural requirements. Traditional enterprise data center networks were built around north-south traffic flowing between servers and external clients. AI training pushes the balance overwhelmingly toward east-west traffic, as accelerators within the same rack, and across racks, exchange gradient updates, parameter synchronizations, and intermediate computation results. Because each synchronous training step waits for its slowest transfer, equipment tuned for general-purpose traffic can add latency and jitter that directly stretch step times and cripple AI training performance.
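To give a sense of the east-west volumes involved, here is a minimal sketch of per-worker traffic in a ring all-reduce, a standard way to synchronize gradients in data-parallel training. In a ring all-reduce over N workers, the reduce-scatter and all-gather phases each move (N - 1)/N of the payload per worker. The 70-billion-parameter model, bf16 gradients, 8 workers, and 400 Gb/s link are hypothetical inputs; production frameworks bucket this traffic, overlap it with computation, and often use sharded schemes that change the volumes.

```python
def ring_allreduce_bytes_per_worker(payload_bytes: float, workers: int) -> float:
    """Bytes each worker transmits during one ring all-reduce.

    Reduce-scatter and all-gather each send (N - 1)/N of the payload per
    worker, so the total is 2 * (N - 1) / N * payload.
    """
    if workers < 2:
        return 0.0
    return 2 * (workers - 1) / workers * payload_bytes


params = 70e9                      # hypothetical 70B-parameter model
payload = params * 2               # bf16 gradients: 2 bytes each, 140 GB per step
sent = ring_allreduce_bytes_per_worker(payload, workers=8)
link_bytes_per_s = 400e9 / 8       # hypothetical 400 Gb/s link, in bytes/s
print(f"{sent / 1e9:.0f} GB sent per worker, "
      f"{sent / link_bytes_per_s:.1f} s at line rate")
```

Every training step repeats this exchange, which is why interconnect bandwidth and jitter show up directly in step time.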

This shift in requirements created an opportunity that most of the technology industry initially missed. While competitors remained focused on building faster accelerators or more efficient algorithms, a small team of engineers in Santa Clara recognized that the future of AI would be determined not by the raw computational power of individual chips, but by the intelligence and efficiency of the connections between them.

Astera Labs: Architecting the AI Rack's Nervous System – From Component to Platform

When Jitendra Mohan, Sanjay Gajendra, and Casey Morrison founded Astera Labs in 2017, their insight was both elegantly simple and strategically prescient: as AI workloads scaled beyond single-node deployments, connectivity would evolve from a supporting technology to the primary determinant of system performance. This wasn't merely technical intuition; it was a calculated strategic bet based on decades of experience building interconnect solutions and observing how previous technology transitions had unfolded.

Phase 1: Solving Initial Scaling Pains

Their first product, the Aries PCIe retimer, addressed what appeared to be a straightforward engineering problem. Each PCIe generation doubles the data rate (16 GT/s for PCIe 4.0, 32 GT/s for PCIe 5.0), and higher frequencies lose more signal per inch of circuit board, connector, and cable. As AI servers packed more GPUs, NICs, and SSDs farther from the CPU, electrical signals degraded before reaching their destination. Signal integrity margins that had been comfortable at earlier speeds became show-stopping problems as hyperscalers built the dense GPU servers behind the multi-thousand-GPU clusters that modern AI training demanded.

Aries restored signal integrity through analog and digital signal processing: it recovers the incoming data, cleans up accumulated jitter, and retransmits a fresh signal, effectively resetting the channel's loss budget and extending the reach of high-speed PCIe connections within AI servers. The technology was decidedly unglamorous (jitter cleanup, signal conditioning, and eye diagram optimization), but it was essential for the increasingly sophisticated AI deployments that companies like Microsoft, Meta, and Google were attempting to build.
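Whether a link needs a retimer comes down to an insertion-loss budget. The sketch below uses the commonly cited end-to-end budgets of about 36 dB for PCIe 5.0 and 32 dB for PCIe 6.0, both at the 16 GHz Nyquist frequency the two generations share (32 GT/s NRZ and 64 GT/s PAM4). The board loss per inch and the fixed package/connector loss are hypothetical values, and real channel compliance involves far more than a single loss number.

```python
from dataclasses import dataclass

# Commonly cited end-to-end loss budgets (dB) at 16 GHz Nyquist.
# Approximate planning figures, not spec-exact compliance limits.
LOSS_BUDGET_DB = {"PCIe 5.0": 36.0, "PCIe 6.0": 32.0}


@dataclass
class Channel:
    trace_inches: float       # total PCB trace length between the two chips
    loss_db_per_inch: float   # hypothetical board-material loss at 16 GHz
    fixed_loss_db: float      # hypothetical packages + connectors + vias

    def total_loss_db(self) -> float:
        return self.trace_inches * self.loss_db_per_inch + self.fixed_loss_db


def needs_retimer(ch: Channel, gen: str) -> bool:
    """True when the estimated insertion loss exceeds the generation's budget."""
    return ch.total_loss_db() > LOSS_BUDGET_DB[gen]


def max_trace_inches(gen: str, loss_db_per_inch: float, fixed_loss_db: float) -> float:
    """Longest trace that fits the budget without a retimer.

    A retimer recovers and re-transmits the data, so the segments on each
    side of it get their own budget, roughly doubling usable reach.
    """
    return (LOSS_BUDGET_DB[gen] - fixed_loss_db) / loss_db_per_inch


# Hypothetical CPU-to-GPU path: 18 inches of trace plus 12 dB of fixed loss.
path = Channel(trace_inches=18, loss_db_per_inch=1.2, fixed_loss_db=12.0)
for gen in LOSS_BUDGET_DB:
    print(f"{gen}: {path.total_loss_db():.1f} dB, "
          f"retimer needed: {needs_retimer(path, gen)}, "
          f"max reach {max_trace_inches(gen, 1.2, 12.0):.1f} in")
```

With these placeholder numbers, the same board layout that works at PCIe 5.0 needs a retimer at PCIe 6.0, because the budget shrinks while the Nyquist frequency stays the same.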

More importantly, those early design wins provided Astera with something far more valuable than immediate revenue: deep, intimate insight into how AI infrastructure was actually evolving in practice. While other companies were developing products based on theoretical requirements or marketing presentations, Astera's engineers were working directly with the teams building real AI systems, learning about constraints, failure modes, and performance requirements that wouldn't become obvious to the broader market for years.

Phase 2: Expanding the Beachhead

This customer intimacy enabled Astera to anticipate and address adjacent problems before they became critical bottlenecks. The Taurus Ethernet smart cable modules tackled the scale-out networking challenges that emerged as AI clusters grew beyond single-rack deployments. These weren't simple passive cables, but intelligent modules that provided active signal conditioning, telemetry, and management capabilities that standard networking infrastructure couldn't deliver.

The Leo CXL memory controllers addressed an even more fundamental constraint: the "memory wall," the widening gap between compute throughput and the memory capacity and bandwidth available to feed it. As language models grew from millions to billions and eventually trillions of parameters, memory capacity became a determining factor in training and inference performance. Leo attaches additional DRAM to servers over CXL, enabling memory expansion, pooling, and sharing so that hosts can draw on capacity beyond what their local DIMM slots provide.
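A quick way to see the memory wall is to estimate the model state that mixed-precision Adam training must hold. The common rule of thumb is about 16 bytes per parameter: bf16 weights and gradients plus fp32 master weights and two fp32 optimizer moments. The sketch below applies that rule; the 80 GB per-device capacity is a hypothetical figure, and activations and buffers are excluded, so real requirements are higher.

```python
import math


def training_state_bytes(params: float, bytes_per_param: int = 16) -> float:
    """Approximate model-state memory for mixed-precision Adam training.

    16 bytes/param = 2 (bf16 weights) + 2 (bf16 gradients)
    + 4 (fp32 master weights) + 4 + 4 (fp32 Adam first/second moments).
    Activations, communication buffers, and fragmentation are excluded.
    """
    return params * bytes_per_param


HBM_PER_DEVICE = 80e9  # hypothetical 80 GB accelerator

for params in (7e9, 70e9, 1e12):
    state = training_state_bytes(params)
    devices = math.ceil(state / HBM_PER_DEVICE)
    print(f"{params / 1e9:>5.0f}B params: {state / 1e12:5.2f} TB of state, "
          f"at least {devices} devices just to hold it")
```

Once model state outgrows any single device, capacity has to come from somewhere else, whether by sharding state across many accelerators or by expanding host memory pools of the kind CXL enables.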

The Scorpio P-Series PCIe fabric switches represented another evolutionary step, supporting platforms such as NVIDIA's modular MGX architecture. Rather than relying on simple point-to-point links, these switches fan a host's PCIe lanes out to GPUs, NICs, and storage and route peer-to-peer traffic among them, improving resource utilization within AI racks and enabling new deployment patterns.
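The routing a PCIe switch performs is, at its core, address-based: memory requests are forwarded to the downstream port whose programmed base/limit window contains the target address, and anything that matches no window goes upstream toward the host. The simplified sketch below illustrates only that idea. The port names and address windows are hypothetical, and it ignores prefetchable windows, ID-routed transactions, and access-control features that a real switch also handles.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DownstreamPort:
    name: str
    base: int    # first address of the memory window behind this port
    limit: int   # last address (inclusive) of that window


def route(address: int, ports: list) -> Optional[str]:
    """Return the downstream port whose window contains the address.

    None means no window matched; a real switch would then forward the
    request upstream toward the root complex.
    """
    for port in ports:
        if port.base <= address <= port.limit:
            return port.name
    return None


# Hypothetical address map behind one switch.
ports = [
    DownstreamPort("gpu0", 0x4000_0000, 0x7FFF_FFFF),
    DownstreamPort("gpu1", 0x8000_0000, 0xBFFF_FFFF),
    DownstreamPort("nic0", 0xC000_0000, 0xC0FF_FFFF),
]
print(route(0x8000_1000, ports))  # gpu1: e.g. a peer-to-peer write from gpu0
print(route(0x1000, ports))       # None: forwarded upstream
```

Peer-to-peer traffic that stays inside the switch never touches the CPU, which is a large part of why switch topology matters for GPU-dense servers.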

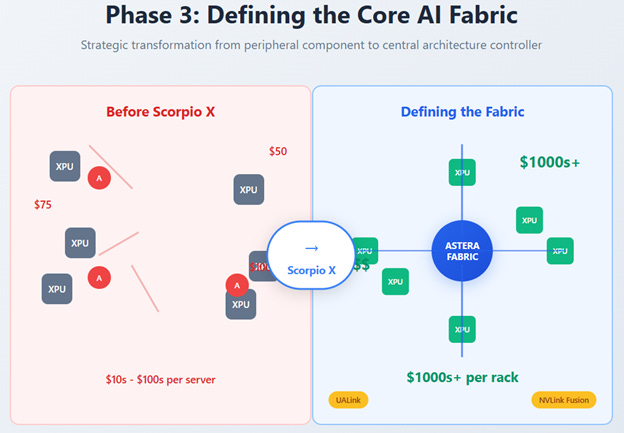

Phase 3: Defining the Core AI Fabric

The introduction of Scorpio X-Series marked a fundamental transformation in Astera's strategic positioning. Rather than simply extending or optimizing existing connections, Scorpio X enables Astera to define the core fabric architecture that ties XPUs together in scale-up configurations. This represents far more than a product evolution—it's a strategic pivot from enabling connectivity to controlling architectural decisions that determine how AI systems are built and deployed.

Scorpio X dramatically increases both the dollar content per rack and Astera's strategic importance to customers. Where earlier products might generate tens or hundreds of dollars in revenue per server, comprehensive Scorpio X deployments can represent thousands of dollars in content, while simultaneously making Astera's technology central to fundamental architectural decisions rather than peripheral optimization choices.

The company's proactive engagement with emerging standards like UALink and NVLink Fusion reflects the same forward-looking approach as its founding insight. Rather than simply responding to market demands, Astera is actively shaping the standards and architectures that will define next-generation AI infrastructure, helping keep its solutions relevant and valuable as the market evolves beyond current-generation technologies.

The Unifying Intelligence: COSMOS as Astera's Strategic Moat

Perhaps most critically, Astera recognized early that hardware differentiation alone wouldn't be sufficient to maintain competitive advantage in an increasingly crowded market. The COSMOS software suite transforms discrete silicon components into an intelligent, manageable system—essentially creating a "rack-level operating system" that provides deep diagnostics, telemetry, fleet management, predictive analytics, and system-wide optimization.

COSMOS creates genuine customer stickiness through deep integration into operational workflows. The Platform Specific Standard Product (PSSP) model enables extensive customization and co-design with hyperscaler customers, embedding Astera's technology into the fundamental operational fabric of AI infrastructure. Once deployed at scale, COSMOS becomes difficult to replace not because of technical limitations, but because of the operational knowledge and workflow integration it enables.

The software also enhances the value proposition of Astera's hardware by transforming passive components into active, intelligent systems. A PCIe retimer with COSMOS integration provides not just signal conditioning, but real-time performance monitoring, predictive failure analysis, and automated optimization capabilities that can prevent problems before they impact AI training runs. This intelligence layer creates competitive differentiation that extends well beyond hardware specifications, making Astera's solutions more valuable and harder to replicate.

Strategic Maneuvering in the AI Interconnect Ecosystem

Astera's evolution from component supplier to infrastructure architect has required navigating one of the technology industry's most complex and rapidly evolving ecosystems. The company must simultaneously maintain deep collaboration with NVIDIA—essential for accessing current AI markets—while championing open standards that could reduce NVIDIA's long-term dominance over AI infrastructure.

The Hyperscaler Imperative: Performance, Optionality, and Control

This delicate balancing act reflects sophisticated understanding of hyperscaler priorities and constraints. Companies like Microsoft, Meta, Amazon, and Google need cutting-edge performance to maintain competitive advantages in AI capabilities, but they also desperately want to avoid vendor lock-in scenarios that could limit their strategic flexibility or negotiating leverage. They've learned from previous technology cycles that over-dependence on single suppliers can create both economic and strategic vulnerabilities.

Astera's positioning as a "neutral, indispensable enabler" directly addresses these concerns. The company provides essential performance capabilities without creating new lock-in risks, actually enhancing customer optionality by enabling multiple accelerator architectures and providing abstraction layers that reduce dependence on any single vendor's roadmap.

Championing Open Standards While Enabling Proprietary Ecosystems

Astera's leadership role in the UALink consortium exemplifies this strategic approach. By driving development of an open standard for XPU-to-XPU connectivity, the company positions itself as an enabler of customer choice rather than a participant in vendor wars. UALink provides a genuine technical alternative to proprietary solutions while creating new market opportunities for Astera's interconnect expertise.

Simultaneously, the company's participation in NVIDIA's NVLink Fusion initiative demonstrates pragmatic recognition of current market realities. While UALink represents a long-term bet on open standards, NVLink Fusion enables immediate market access and revenue generation by helping custom ASIC developers integrate with NVIDIA's dominant ecosystem.

This dual approach reflects the founders' technical DNA and strategic sophistication. Rather than making binary bets on particular standards or vendors, Astera is positioning itself to provide value regardless of how interconnect standards evolve, while actively working to shape those standards in directions that favor its capabilities.

The Design Win Flywheel and Trust Multiple

The cumulative effect of this strategic positioning has created what might be termed a "design win flywheel." Astera's track record of over 300 design wins since founding has established credibility with the most demanding customers in the technology industry. In hyperscaler procurement, once a vendor proves they can execute at scale without creating operational headaches, they tend to remain on approved vendor lists for extended periods.

This operational track record, combined with deep customer intimacy and active participation in standards development, has created barriers to entry that extend well beyond pure product specifications. New entrants must not only match Astera's technical capabilities but also replicate years of customer relationship building, operational trust, and standards influence—a combination that's difficult to achieve quickly regardless of financial resources.

The Economics of AI's Hidden Engine: Valuation Levers and Scenarios

The financial implications of Astera's strategic positioning become clear when examining the transformation of AI infrastructure economics. The company has successfully orchestrated a dramatic increase in dollar content per AI rack, evolving from tens of dollars in single retimer solutions to potentially hundreds or thousands of dollars through comprehensive suites of retimers, switches, memory controllers, and COSMOS software licensing.

Astera's Financial Trajectory: From Startup to Infrastructure Provider

The revenue trajectory tells a compelling story of market validation and execution. Revenue grew roughly fivefold in two years, from $79.9 million in 2022 to $396.3 million in 2024, the kind of growth that typically characterizes companies solving fundamental infrastructure problems during technology inflection points. More importantly, this growth has been achieved while maintaining gross margins around 75%, suggesting that customers view Astera's solutions as genuinely differentiated rather than commoditized components.
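The growth rate implied by the two revenue figures above can be checked with the standard compound-annual-growth-rate formula, (end / start)^(1 / years) - 1. The function name is ours; the inputs are the 2022 and 2024 figures from the text.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


growth = cagr(79.9, 396.3, years=2)  # 2022 -> 2024 revenue, $ millions
print(f"{growth:.1%}")  # 122.7%
```

In other words, revenue more than doubled in each of the two years on a compounded basis.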

The geographic revenue distribution reveals both opportunities and risks in Astera's business model, but it needs careful reading. Semiconductor vendors typically attribute revenue to the location products ship to, so these figures reflect where servers are assembled more than where they are ultimately deployed. Taiwan represents the largest single market at $269.9 million, reflecting the concentration of AI server manufacturing among Taiwanese ODMs and contract manufacturers. China contributed $72.7 million in 2024, creating meaningful exposure to geopolitical tensions and export restrictions. The comparatively small US figure of $11.3 million therefore understates American demand, since much of the hardware shipped to Taiwan ends up in US hyperscalers' data centers.
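Expressed as shares of the $396.3 million total, the regional figures above look like this. The "All other" line is a residual computed here as total minus the three named regions; it is not a separately reported figure in the text.

```python
revenue_2024 = 396.3  # $ millions, total 2024 revenue
by_region = {"Taiwan": 269.9, "China": 72.7, "United States": 11.3}
# Residual: whatever the three named regions do not account for.
by_region["All other"] = round(revenue_2024 - sum(by_region.values()), 1)

for region, amount in by_region.items():
    print(f"{region:<14} ${amount:6.1f}M  {amount / revenue_2024:6.1%}")
```

Taiwan works out to roughly 68% of revenue, China about 18%, the United States under 3%, and the residual about 11%.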

However, a valuation of approximately 30x enterprise value to revenue at the time of writing reflects extraordinarily high expectations for continued execution and market expansion. This multiple assumes not just sustained growth, but acceleration across multiple product lines, successful new product launches, and continued market share expansion in an increasingly competitive environment.

Scenario Analysis: Quantifying Three Possible Futures

The path forward for Astera can be modeled through three distinct scenarios, each reflecting different combinations of execution success, competitive dynamics, and market evolution:

Bull Case ($180-220): "Interconnect Linchpin" (20% probability)

The bull scenario assumes Astera successfully executes across all strategic dimensions simultaneously. Scorpio X becomes a dominant platform for scale-up AI architectures, capturing significant design wins across both NVIDIA and custom ASIC deployments. UALink gains broad industry adoption, with Astera capturing 60% or more of the emerging market through early technical leadership and standards influence.

Most critically, COSMOS evolves into a genuine software platform, generating $150-200 million in annual recurring revenue with software-like margins. This transformation would fundamentally alter Astera's business model, justifying software company valuations rather than hardware vendor multiples.

Revenue growth accelerates to 35-40% annually, reaching $1.2-1.4 billion by 2027. Gross margins expand to 78-80% as software content increases and premium products capture larger market share. A strategic stake from NVIDIA or a major hyperscaler provides both validation and a deeper competitive moat, cementing Astera's position as essential infrastructure.

Base Case ($120-140): "Sustained AI Momentum" (55% probability)

The base scenario reflects solid execution within a competitive but growing market. Astera maintains its current trajectory with 25-30% annual revenue growth, reaching $900 million to $1.1 billion by 2027. The company successfully launches Scorpio X and captures meaningful market share, but faces pricing pressure from competitive responses by Broadcom and other incumbents.

Dual-sourcing becomes standard practice among hyperscalers, limiting Astera's ability to capture outsized market share but providing stable, predictable revenue streams. COSMOS provides meaningful differentiation and some software-like characteristics, but doesn't achieve the scale or recurring revenue potential of the bull scenario.

Gross margins remain stable in the 72-75% range, reflecting balanced competitive dynamics and product mix evolution. The company achieves operational leverage as revenues scale, but faces continued R&D investment requirements to maintain technological leadership.

Bear Case ($60-80): "Competitive Squeeze & Execution Hurdles" (25% probability)

The bear scenario reflects multiple execution challenges and competitive pressures converging simultaneously. Scorpio X experiences production delays or qualification issues with key customers, allowing competitors to capture critical design wins. Broadcom's bundling strategy proves effective, winning major platform decisions by offering integrated retimer and switch solutions at attractive pricing.

UALink adoption stalls or fragments across multiple competing standards, reducing the market opportunity and Astera's first-mover advantages. Silicon photonics adoption accelerates faster than expected, threatening copper-based connectivity solutions across multiple product lines.

Revenue growth decelerates to 10-15% annually, reaching only $650-750 million by 2027. Gross margins compress to 65-68% under competitive pressure and adverse product mix shifts. A broader AI CapEx slowdown in 2026-2027 reduces overall market growth, intensifying competition for available opportunities.
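The three scenarios can be sanity-checked with a few lines of arithmetic. The sketch below takes the price ranges, probabilities, and 2027 revenue endpoints stated above, computes a probability-weighted value using each range's midpoint (about $129), and computes the compound growth each revenue endpoint implies from the 2024 base of $396.3 million. Measured from 2024, the endpoints imply roughly 45-52% (bull), 31-41% (base), and 18-24% (bear) annual growth, faster than the rates quoted in each scenario, so the quoted rates presumably apply from a larger starting point than 2024 revenue. Using range midpoints is our simplifying assumption.

```python
REV_2024 = 396.3  # $ millions, reported 2024 revenue

# name: (price_low, price_high, probability, 2027 revenue low, high in $M)
SCENARIOS = {
    "bull": (180, 220, 0.20, 1200, 1400),
    "base": (120, 140, 0.55, 900, 1100),
    "bear": (60, 80, 0.25, 650, 750),
}

total_p = sum(s[2] for s in SCENARIOS.values())
if abs(total_p - 1.0) > 1e-9:
    raise ValueError(f"probabilities sum to {total_p}, not 1")

# Probability-weighted value using the midpoint of each price range.
expected = sum((lo + hi) / 2 * p for lo, hi, p, _, _ in SCENARIOS.values())
print(f"probability-weighted midpoint: ${expected:.2f}")  # $129.00


def implied_cagr(start: float, end: float, years: int = 3) -> float:
    """Annual growth needed to go from start to end over `years` years."""
    return (end / start) ** (1 / years) - 1


for name, (_, _, _, rev_lo, rev_hi) in SCENARIOS.items():
    print(f"{name}: 2024-2027 implied CAGR "
          f"{implied_cagr(REV_2024, rev_lo):.1%} to {implied_cagr(REV_2024, rev_hi):.1%}")
```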

Key Financial Levers and Sensitivities

The wide range of potential outcomes reflects the binary nature of several critical variables:

· Gross Margin Trajectory represents the most immediate sensitivity, with each 200 basis point change translating to approximately $15-20 impact on stock price. This sensitivity reflects both product mix evolution and competitive dynamics that could either support premium pricing or force commoditization.

· Scorpio X Success or Failure could create a $30-40 differential in stock price, as this product line represents Astera's bid to transition from component supplier to architecture controller. Success validates the strategic transformation; failure suggests the company may remain confined to lower-value roles.

· Market Share Defense in core PCIe retimer markets remains critical, with a 500 basis point share loss potentially impacting stock price by $25 or more. This reflects the importance of the installed base in generating cash flows to fund new product development.

· Multiple Compression Risk represents perhaps the greatest long-term threat, as the current 30x EV/Revenue multiple assumes continued high growth. Any signal of growth deceleration could trigger compression toward 8-12x multiples typical of hardware companies, regardless of underlying business fundamentals.
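The multiple-compression risk reduces to one ratio: if the EV/Revenue multiple falls from 30x to a lower level, revenue must grow by the ratio of the two multiples just to keep enterprise value flat. The sketch below works through the 8-12x range above and a hypothetical case of three years at 30% growth followed by a rerating to 12x. It deliberately ignores net cash and share-count changes, so it speaks to enterprise value rather than the share price directly.

```python
def revenue_growth_to_hold_ev(old_multiple: float, new_multiple: float) -> float:
    """Revenue growth factor needed to keep enterprise value unchanged
    when the EV/Revenue multiple moves from old_multiple to new_multiple."""
    return old_multiple / new_multiple


def ev_change(revenue_growth: float, old_multiple: float, new_multiple: float) -> float:
    """Ratio of new to old enterprise value after revenue grows by the
    factor revenue_growth (2.0 = doubling) and the multiple rerates."""
    return revenue_growth * new_multiple / old_multiple


for m in (12, 8):
    print(f"30x -> {m}x: revenue must grow "
          f"{revenue_growth_to_hold_ev(30, m):.2f}x to hold EV flat")

# Hypothetical: three years at 30% growth, then a rerating from 30x to 12x.
print(f"EV ratio: {ev_change(1.3 ** 3, 30, 12):.2f}")  # 0.88
```

Even strong growth can leave enterprise value lower if the rerating is large enough, which is why the multiple itself is listed as a key sensitivity.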

The Competitive Gauntlet & Navigating the Fog of AI War

Astera's ambitious growth trajectory faces mounting pressure from multiple directions as larger, better-resourced competitors recognize the strategic importance of AI interconnect solutions. The company must execute flawlessly on an increasingly complex roadmap while defending market position against incumbents who can leverage scale, existing customer relationships, and broader product portfolios.

The Incumbents Strike Back

Broadcom represents perhaps the most immediate and dangerous threat to Astera's positioning. With its vast portfolio of networking and connectivity solutions, Broadcom possesses both the technical capabilities and customer relationships necessary to compete directly across most of Astera's product lines. More concerning, Broadcom's scale enables bundling strategies that could fundamentally alter competitive dynamics.

If Broadcom successfully packages retimers, switches, and other connectivity components into comprehensive platform offerings, it could undermine Astera's value proposition as a best-of-breed provider. Hyperscalers might find it operationally simpler and economically attractive to source complete connectivity solutions from a single vendor, particularly if Broadcom can offer meaningful cost savings through integration and volume purchasing.

NVIDIA presents a different category of competitive challenge. While the companies currently maintain collaborative relationships through initiatives like NVLink Fusion, NVIDIA's growing emphasis on controlling the entire AI stack creates natural tensions. NVIDIA's development of proprietary interconnect technologies could eventually reduce demand for third-party solutions, particularly if the company decides to more tightly integrate connectivity functions into its accelerator silicon.

The NVLink ecosystem represents both an opportunity and a threat for Astera. Participation provides access to the largest and most valuable AI infrastructure deployments, but also acknowledges NVIDIA's architectural control over these systems. As NVIDIA's integration capabilities improve, the scope for third-party value addition could diminish, potentially relegating companies like Astera to lower-value commodity roles.

Marvell Technology adds complexity through its focus on custom silicon and co-packaged optics solutions. As AI workloads push toward higher data rates and longer reaches, optical interconnect technologies could challenge copper-based solutions across multiple segments. Marvell's capabilities in both domains position it to offer integrated solutions that span the transition from electrical to optical connectivity.

The "Good Enough" Trap and Commoditization Risk

Perhaps more insidious than direct competition is the risk of market commoditization as AI infrastructure matures. Early AI deployments prioritize performance over cost optimization, creating market opportunities for premium solutions. As the technology matures and deployment patterns standardize, customers may increasingly favor "good enough" solutions that provide adequate performance at lower cost.

This dynamic has played out repeatedly in previous technology cycles. Early adopters pay premiums for cutting-edge capabilities, but mainstream adoption typically drives demand toward more cost-effective alternatives. Astera must continuously innovate to maintain performance differentiation while also developing products that can succeed in more price-sensitive market segments.

The risk is particularly acute for older product generations. As PCIe Gen6 and CXL 3.0 transition from premium to standard offerings, competitive pressure on pricing and margins could intensify. Astera's ability to introduce next-generation products (PCIe Gen7, CXL 4.0, UALink implementations) faster than competitors commoditize current-generation offerings will largely determine long-term profitability.

Hyperscaler In-Housing and the Partnership Paradox

The most existential threat may come from Astera's own customers. Amazon, Google, Microsoft, and Meta have all demonstrated sophisticated semiconductor design capabilities and willingness to develop custom silicon when it provides competitive advantages or meaningful cost savings. While Astera's PSSP model creates switching costs that discourage this development, it doesn't eliminate the risk entirely.

The irony is that Astera's deep customer engagement, while creating competitive advantages, also provides customers with detailed understanding of interconnect requirements and design approaches. This knowledge transfer could eventually enable internal development efforts that displace third-party solutions.

Astera must walk a careful line between providing enough value and customization to remain indispensable while avoiding knowledge transfer that could facilitate customer internalization. The COSMOS software platform may provide some protection by creating operational dependencies that extend beyond hardware specifications, but even software capabilities can eventually be replicated by determined customers with sufficient resources.

Geopolitical and Macroeconomic Headwinds

External factors add another layer of complexity to Astera's competitive challenges. The company's significant revenue exposure to China—over $72 million in 2024—creates vulnerability to escalating trade tensions and export restrictions. While Astera has been working to diversify its geographic revenue base, sudden policy changes could impact near-term financial performance and force costly strategic pivots.

Supply chain considerations present additional risks. Astera's dependence on TSMC for advanced semiconductor manufacturing creates single-point-of-failure vulnerability, particularly as geopolitical tensions around Taiwan intensify. The company has begun qualifying alternative foundries, but transitioning production involves significant technical and financial costs.

Broader macroeconomic factors could also impact AI infrastructure spending patterns. While current AI investment levels appear sustainable, any significant slowdown in hyperscaler CapEx could intensify competition for available opportunities and pressure pricing across all product categories.

Closing the Arc: Why Connectivity is the Product, and Astera's Strategic Value

The historical parallel that opened this analysis now comes full circle. Just as Intel's front-side bus limitations eventually pushed it to move the memory controller onto the processor and replace the shared bus with point-to-point QuickPath Interconnect links in 2008, AI's connectivity requirements are reshaping how the entire industry approaches computing infrastructure design. The constraint has become the product.

Astera's Unique Position in the AI Stack

Astera Labs occupies a distinctive and potentially valuable position in this transformation. The company is neither a pure-play component vendor nor a systems integrator, but something more strategically important: an architect of the intelligent fabric that enables AI systems to function as coherent wholes rather than collections of discrete processing elements.

Its hardware provides the essential physical pathways for data movement, while COSMOS software adds the intelligence layer that optimizes and manages those pathways in real time. This combination creates value that extends well beyond the sum of individual components, enabling system-level performance optimization that wouldn't be possible with discrete, uncoordinated solutions.

The company's strategic bets on open standards like UALink, combined with pragmatic support for dominant ecosystems like NVIDIA's, provide crucial optionality for the entire AI industry. Rather than forcing binary choices between proprietary and open solutions, Astera enables hybrid approaches that balance performance optimization with strategic flexibility.

Investment Thesis: Infrastructure in Transition

Astera Labs represents a concentrated investment in a specific but compelling thesis: that as AI workloads become more sophisticated and pervasive, the intelligence and performance of interconnect infrastructure will increasingly determine success or failure for the entire ecosystem. This isn't merely a technology trend—it's an economic reality driven by the massive capital investments that AI infrastructure represents.

The current valuation reflects high expectations for continued execution across multiple dimensions simultaneously. Astera must successfully ramp new products, defend market share in existing segments, navigate increasing competitive pressure, and transform its business model toward higher-value software offerings. That's an ambitious agenda that allows little room for execution stumbles or strategic missteps.

Yet the company has demonstrated remarkable consistency in meeting these challenges. The progression from simple retimers to comprehensive platform offerings reflects sophisticated understanding of market evolution and customer requirements, and the trust it has built with the most demanding customers in the technology industry through consistent operational execution provides a foundation for continued growth.

Perhaps most importantly, Astera's positioning reflects a deep understanding of the fundamental economics driving AI infrastructure development. As AI capabilities become more central to competitive advantage across industries, the performance and reliability of the infrastructure supporting those capabilities become correspondingly more valuable.

The Railroad Analogy Realized

In the AI gold rush, while countless companies focus on building better pickaxes (accelerators) or finding more gold (training data and algorithms), Astera is constructing the intelligent railroads that make the entire enterprise possible. If Astera succeeds in becoming the de facto standard for critical elements of AI interconnect infrastructure, its strategic importance could justify valuations that appear ambitious today.

The railroad analogy is particularly apt because railroads created value not just through transportation, but by enabling entirely new economic activities that wouldn't have been possible otherwise. Similarly, Astera's interconnect solutions don't just improve existing AI capabilities—they enable new architectures, deployment patterns, and use cases that expand the total addressable market for AI infrastructure.

But railroads also faced commodity pricing pressure as the technology matured and competition intensified. Astera's long-term success will depend on continuously innovating to maintain differentiation while building sustainable competitive moats through software integration, customer relationships, and standards influence.

The company represents a pure play on infrastructure transformation during a technology inflection point: a historically profitable investment theme when executed successfully, but one that demands exceptional operational excellence and strategic vision. Whether Astera can maintain both while navigating an increasingly complex competitive landscape will determine not just its own future, but the shape of AI infrastructure for years to come.

In an era where AI performance increasingly determines competitive advantage across industries, the companies that control the data highways may indeed capture more enduring value than those manufacturing the vehicles that travel on them. Astera's bet is that intelligence applied to connectivity infrastructure creates sustainable differentiation in a world where data movement determines what's possible. It's a compelling vision, backed by impressive execution, but priced for a level of perfection that leaves little margin for error.

#AIInfrastructure #DataCenters #AsteraLabs #Interconnect #LLMs #TechStrategy #GPUs #Hyperscalers #Semiconductors #CXL #UALink #NVIDIA #AIStack #EdgeToCloud #AIeconomics #AIarchitecture #CloudComputing #TechInvesting #AIhardware #PlatformStrategy #ConnectivityMatters ALAB NVDA AVGO CRDO ANET

General Disclaimer: The information presented in this communication reflects the views of the author and does not necessarily represent the views of any other individual or organization. It is provided for informational purposes only and should not be construed as investment advice, a recommendation, an offer to sell, or a solicitation to buy any securities or financial products.

While the information is believed to be obtained from reliable sources, its accuracy, completeness, or timeliness cannot be guaranteed. No representation or warranty, express or implied, is made regarding the fairness or reliability of the information presented. Any opinions or estimates are subject to change without notice.

Past performance is not a reliable indicator of future performance. All investments carry risk, including the potential loss of principal. This communication does not consider the specific investment objectives, financial situation, or particular needs of any individual.

The author and any associated parties disclaim any liability for any direct or consequential loss arising from the use of this material and undertake no obligation to update or revise it.